In this part, we will use Hive to execute all the queries that we have been processing since the beginning of this series of tutorials.

In this part, we will use Hive to execute all the queries that we have been processing since the beginning of this series of tutorials.

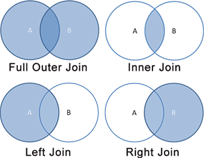

In nearly all parts, we have coded MapReduce jobs to solve specific types of queries (filtering, aggregation, sorting, joining, etc…). It was a good exercise to understand Hadoop/MapReduce internals and some distributed processing theory, but it required to write a lot of code. Hive can translate SQL queries into MapReduce jobs to get results of a query without needing to write any code.

We will start by installing Hive and setting up tables for our datasets, before executing our queries from previous parts and seeing if Hive can have better execution times than our hand-coded MapReduce jobs.